Apple analyzes user photos for child pornography

Apple has announced new initiatives to fight child pornography, including a system that analyzes user photos. The system covers photos stored on iCloud and also involves the iPhone. The announcement confirms information that was reported earlier this morning.

Photo analysis to detect child pornography

With iOS 15, Apple will be able to detect known child sexual abuse images by comparing them against the photos users store on iCloud. Apple will report users who hold such content to organizations that work with law enforcement.

Of course, some may see this as a violation of privacy. But Apple says its mechanism is designed with confidentiality in mind. Photos are analyzed directly on the iPhone rather than on servers. Each image is compared against a database to see whether it matches one known to be child pornography. The match also works if the image has been converted to black and white or cropped. Apple says it turns that database into an unreadable set of hashes stored securely on users’ devices. The hashing technology, called NeuralHash, analyzes an image and converts it to a unique number specific to that image.
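To make the idea of hash matching concrete, here is a minimal sketch. It does not reproduce NeuralHash, whose internals are not described here. It swaps in a much simpler "average hash" as a stand-in, and every name in it (`average_hash`, `hamming_distance`, `matches_known`, the sample pixel grids) is hypothetical. It only illustrates how an image can be reduced to a number and compared against a set of known numbers, so that a slightly altered copy still matches.

```python
# Illustrative stand-in only: a simple "average hash", NOT Apple's NeuralHash.
# Images are represented as small grids of grayscale values (0-255).

def average_hash(pixels):
    """Return an integer with one bit per pixel: 1 if brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits

def hamming_distance(a, b):
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")

def matches_known(image_hash, known_hashes, max_distance=0):
    """True if the hash is within max_distance bits of any known hash."""
    return any(hamming_distance(image_hash, k) <= max_distance
               for k in known_hashes)

# Hypothetical sample data: a "known" image, a brightened copy, an unrelated one.
known_image = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 35, 225],
    [18, 215, 20, 235],
]
brightened_copy = [[p + 15 for p in row] for row in known_image]
unrelated_image = [[255 - p for p in row] for row in known_image]

# The device would hold only the hashes, never the images themselves.
known_hashes = {average_hash(known_image)}

print(matches_known(average_hash(brightened_copy), known_hashes))  # altered copy
print(matches_known(average_hash(unrelated_image), known_hashes))  # different image
```

In this toy version the brightened copy produces the same hash as the original, while the unrelated image does not. A real perceptual hash has to tolerate far more, such as cropping, which this stand-in does not handle.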

How does the process work? Apple first receives an alert when images appear to be related to child pornography. An Apple employee then checks whether the alert is legitimate. If it is confirmed, the employee deactivates the user’s iCloud account and sends a report to the authorities. Apple says in passing that its system is “very precise”.
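The review steps described above can be sketched as a small decision function. This is only an illustration of the sequence reported here (alert, human check, then deactivation and report), not Apple's actual implementation. The names `Account`, `ReviewOutcome` and `handle_alert` are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Account:
    user_id: str          # hypothetical identifier
    active: bool = True   # whether the iCloud account is enabled

@dataclass
class ReviewOutcome:
    account_disabled: bool
    report_sent: bool

def handle_alert(account: Account, reviewer_confirms: bool) -> ReviewOutcome:
    """Apply the outcome of the human review that follows an alert."""
    if not reviewer_confirms:
        # The reviewer judged the alert not legitimate: nothing happens.
        return ReviewOutcome(account_disabled=False, report_sent=False)
    # Confirmed: deactivate the account and report to the authorities.
    account.active = False
    return ReviewOutcome(account_disabled=True, report_sent=True)

flagged = Account(user_id="example-user")
outcome = handle_alert(flagged, reviewer_confirms=True)
print(flagged.active, outcome)
```

The point of the sketch is that no action is taken on the alert alone. Deactivation and reporting happen only after the human check confirms it.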

The system will initially be limited to the United States, but Apple says it will arrive in other countries over time.

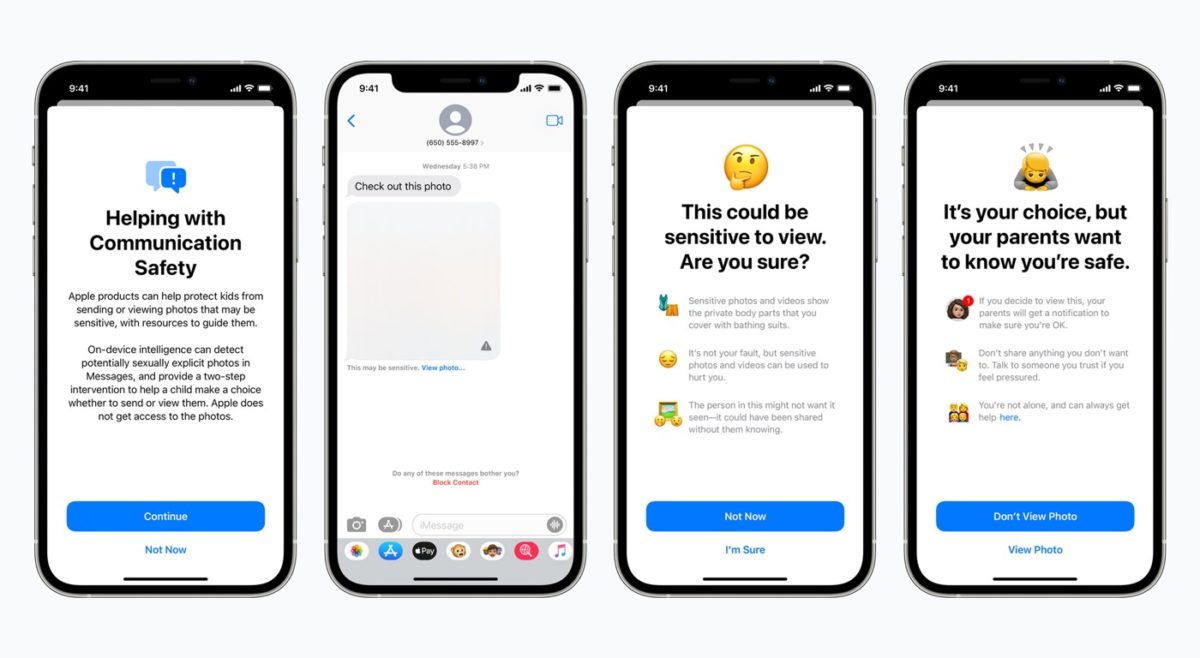

Alerts on iMessage

Another Apple initiative concerns iMessage. The Messages app will display a pop-up to warn children and their parents when sexually explicit photos are received or sent. Machine learning running directly on the iPhone analyzes the images. If a photo is judged to be sexually explicit, the Messages app blurs it and the child receives a warning, since Apple does not want the child to open it. Parents, for their part, can receive a notification when the child opens the photo despite the warning or sends a sexual photo to another contact.
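The behavior described above amounts to a few simple rules, sketched below. This is a hedged illustration, not the Messages app's real logic: the function `message_actions`, its parameters and the action labels are hypothetical, and the on-device classifier is reduced to a plain boolean. Treating parental notifications as an opt-in setting is also an assumption.

```python
def message_actions(is_explicit, direction, child_proceeds, parents_opted_in):
    """Return the list of actions for one photo in a child's conversation.

    is_explicit      -- result of the on-device classifier (stand-in boolean)
    direction        -- "received" or "sent"
    child_proceeds   -- child opens or sends the photo despite the warning
    parents_opted_in -- assumed setting: parents asked to be notified
    """
    if not is_explicit:
        return []
    actions = []
    if direction == "received":
        actions.append("blur_photo")   # incoming photo is hidden at first
    actions.append("warn_child")       # pop-up shown in both directions
    if child_proceeds and parents_opted_in:
        actions.append("notify_parents")
    return actions

print(message_actions(True, "received", False, True))
print(message_actions(True, "received", True, True))
print(message_actions(True, "sent", True, True))
```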

This will arrive with iOS 15 and macOS Monterey. Apple says that iMessage conversations remain encrypted, even with this explicit-image detection system.

Siri will offer resources

Finally, Apple will improve Siri by providing more resources to help kids and parents stay safe and get help when needed. For example, Siri will be able to help people who want to report child pornography by displaying a list of the organizations that handle such reports.

This will arrive with iOS 15, macOS Monterey, and watchOS 8.