Google Soli: the human-machine interface capable of interpreting body language

Human-machine interfaces have not progressed much since the iPhone, which was itself only a "touch" refinement of the graphical interface of the first Macintosh (and of Xerox's prototype workstations). Admittedly, you can now issue a few targeted voice commands to partially control your smartphone or connected speaker, but nothing that can truly replace the conventional graphical interface.

Google's ATAP division (Advanced Technology and Projects) is working on a potentially game-changing body language analysis technology. Google Soli combines a miniature radar sensor, the Soli chip, with AI software capable of translating body movements into control commands. The chip is found in the Google Pixel 4 (it was dropped from the Pixel 5) and in the second-generation Nest Hub. Simple hand gestures made away from the screen, without touching it, let you turn off an alarm, take a snapshot, start a piece of music or a video, and so on.

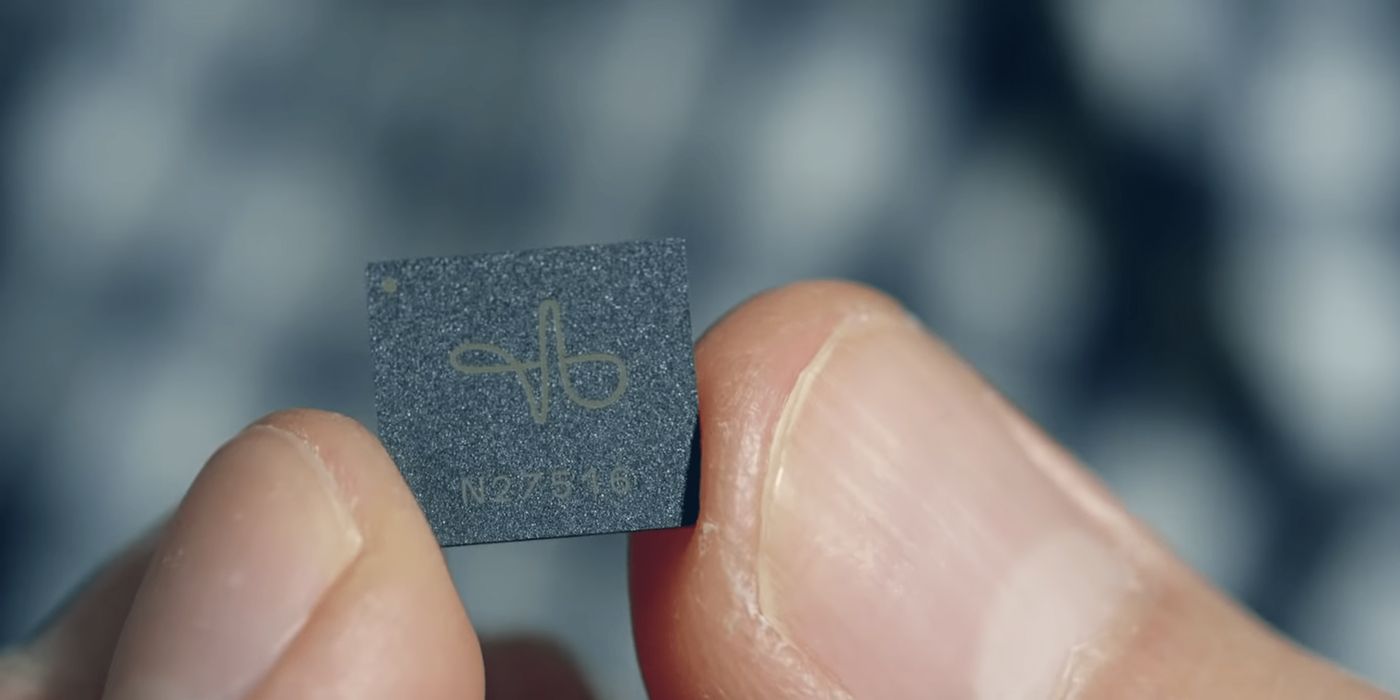

Pixel 4’s Soli chip

For now, admittedly, the technology remains very fragmented, much like voice commands, but it is no longer far-fetched to imagine voice and gesture interfaces merging into a single control interface whose modalities would approach natural-language dialogue. Picture the AI in the film Her also able to "read" our moods and interpret our gestures, resulting in an ultra-personalized human-machine interface…