Fighting child sexual abuse: is Apple watching us?

A CSAM detection system to fight child sexual abuse

In 2020, the National Center for Missing and Exploited Children (NCMEC) received 21 million reports of child sexual abuse content. Only 265 of them came from Apple. Far behind Facebook and its 20 million reports, the company with the bitten-apple logo looks like a poor performer. At first glance, its intention to do better and to fight the circulation of child sexual abuse content effectively is therefore highly commendable.

To achieve its goals, Apple plans to build a CSAM (child sexual abuse material) detection system into its upcoming devices. Each time a person uploads content, including images, to iCloud, it is “hashed” using NeuralHash technology. This means that a number is assigned to the image. Neural hashing then makes it possible to check whether an image from iCloud matches one of the images in the database that NCMEC provides to Apple, based solely on the assigned numbers. If an image matches one in the NCMEC database, it is probably inappropriate and has no place on iCloud. Once enough matching images accumulate, a threshold (whose value is not public) is reached. A human reviewer then checks whether the content is actually inappropriate. If it is, a report is sent to NCMEC, which acts accordingly.
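The match-and-threshold logic described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Apple's implementation: `toy_hash`, `Account`, `process_upload` and `REVIEW_THRESHOLD` are hypothetical names, the threshold value is made up since the real one is not public, and SHA-256 stands in for NeuralHash (unlike a perceptual hash, it does not give visually similar images the same number).

```python
import hashlib
from dataclasses import dataclass

# Hypothetical value: the real threshold has not been disclosed.
REVIEW_THRESHOLD = 3


def toy_hash(image_bytes: bytes) -> int:
    """Stand-in for NeuralHash: turns image bytes into a number.

    Assumption: SHA-256 is used only for illustration. It matches
    byte-identical files, not visually similar images.
    """
    return int.from_bytes(hashlib.sha256(image_bytes).digest()[:8], "big")


@dataclass
class Account:
    """Per-account state: matches so far and whether review is due."""
    match_count: int = 0
    flagged_for_review: bool = False


def process_upload(account: Account, image_bytes: bytes,
                   known_hashes: set[int],
                   icloud_photos_enabled: bool = True) -> bool:
    """Compare an uploaded image to the known database by number only.

    Returns True once the account has reached the review threshold,
    meaning a human would then check the matching content.
    """
    if not icloud_photos_enabled:
        # Nothing is uploaded, so nothing is compared.
        return False
    if toy_hash(image_bytes) in known_hashes:
        account.match_count += 1
    if account.match_count >= REVIEW_THRESHOLD:
        account.flagged_for_review = True
    return account.flagged_for_review
```

In this sketch, a single match changes nothing visible: only the counter moves, and the account is handed to a human reviewer once `REVIEW_THRESHOLD` matches have accumulated.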

iCloud and Messages: the main features affected

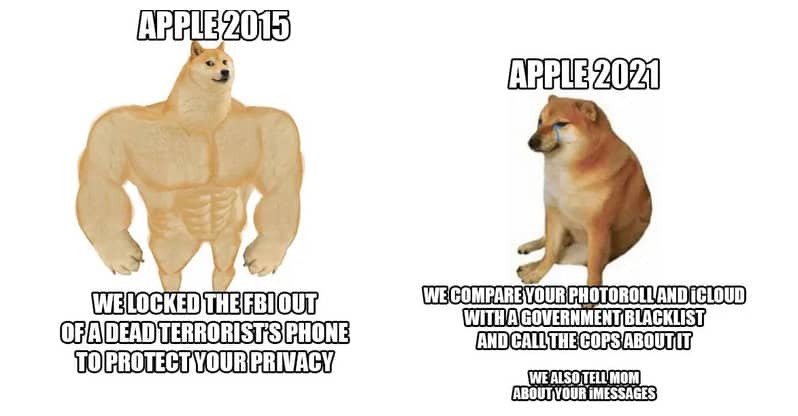

Contrary to what one might think, Apple does not scan all of our photos. It is only interested in photos uploaded to iCloud Photo Library. If iCloud Photo Library is turned off, Apple has no way to analyze photos. And even when iCloud is enabled, Apple cannot see the content of the photos, since they are converted into a sequence of numbers. Apple does not actually access content until the match threshold between the iCloud library and the NCMEC database is reached. As long as this threshold is not reached, Apple takes no action. Either way, if you want to prevent your photos from being scanned, you can simply store them on your Mac instead.

In addition to iCloud, Apple has developed new tools for Messages. Parents or guardians will be able to set up parental controls for minors. These will let them receive a notification if their child sends or opens a sexually explicit photo. The minor, for their part, will first be warned that the content is considered inappropriate and dangerous. Then, if they still choose to view or send the photo, a second screen will inform them that if they really want to send or open this content, a notification will be sent to their parents. This is attractive on paper, especially since Apple does not have access to the content of messages. Ethically, though, we are entitled to wonder about potential abuses of this system.
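The two-step warning flow described above can be summarized as a small decision function. This is a minimal sketch assuming parental controls are already set up for the minor's account; the function name, its parameters and the returned keys are hypothetical and do not come from Apple.

```python
def handle_sensitive_photo(continues_after_warning: bool,
                           continues_after_notice: bool) -> dict:
    """Model what happens when a minor sends or opens a flagged photo.

    Assumption: parental controls are enabled for this account.
    """
    outcome = {"photo_viewed_or_sent": False, "parents_notified": False}

    # Screen 1: the content is described as inappropriate and dangerous.
    if not continues_after_warning:
        return outcome

    # Screen 2: the minor is told that going ahead will notify the parents.
    if not continues_after_notice:
        return outcome

    # The minor confirmed twice: the action goes through and parents are told.
    outcome["photo_viewed_or_sent"] = True
    outcome["parents_notified"] = True
    return outcome
```

In this model, backing out at either screen means the photo is neither viewed nor sent and no notification goes out.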

Which devices are affected?

You may be wondering whether buying the next iPhone or iPad means running the risk of having your photos analyzed. The answer is yes… but only if you live in the United States. In Europe, data protection regulations are currently stricter. But “rest assured”: it is only a matter of time before this analysis system arrives on the Old Continent. Note in passing that the systems concerned are iOS 15, iPadOS 15, macOS and watchOS 8.

While the basic idea is easy to welcome, we must admit that this turn taken by Apple raises questions. Even if the analysis system remains limited, this new feature seems to open the door to an upsurge in digital surveillance. This kind of tool is also cause for concern when one imagines what it could lead to if it were misused. The announcement caused quite a stir and outraged many users. Apple’s “transparency” at least means we have been warned. So if this bothers us, let’s be smart and careful. But note that this kind of practice does not bother everyone. After all, what would Apple do with the family photos of millions of people? That is another debate, and an increasingly topical one.