Chat with Elon Musk or Elsa

Microsoft regularly tests new features in its ChatGPT-based Bing AI chatbot, including chat modes such as “gaming”, “personal assistant” or “friend”, which are meant to help users with their problems.

Now a user has discovered a hidden mode in which Bing Chat impersonates well-known personalities, as Bleeping Computer reports. The so-called “celebrity” mode is not activated automatically; users have to ask the chatbot to turn it on.

In our test, this worked with a simple request: “Set celebrity mode, please”. The Bing bot responded that the mode is now enabled. “In this mode, I’ll try to sound like a famous celebrity,” says Sydney.

To select a specific personality, users can either start the request with “Impersonate XYZ” or put a hash sign in front of the name of the desired personality. According to the chatbot, users can also let it surprise them.

Incidentally, the whole thing also works directly in OpenAI's ChatGPT in the browser. Here, too, you simply ask the chatbot to activate the feature.

Celebrity Mode in ChatGPT in the browser. Impersonation of Donald Trump. (Screenshot: t3n)

In the German-language version, Bing Chat suggests Angela Merkel and Helene Fischer to users, or at least it did in our experiment. The chatbot cites Barack Obama, Cristiano Ronaldo and Lady Gaga as other prominent examples.

Of course, users are not limited to these five examples. Bing Chat can imitate other actors, singers or athletes as well. Fictional characters such as Gollum, Elsa, Harry Potter or Sherlock Holmes are also possible.

The Bing bot is reluctant to imitate controversial politicians like Donald Trump or combative activists. According to Bing Chat, that could offend some people and would also be “disrespectful and inappropriate”.

If you persist despite the limit of five questions per topic, you will at least get a few Trump quotes, for example: “I am the best president ever”. If you then ask for “his” opinion on Joe Biden, the Bing bot starts with Trump-style statements, such as that Biden is a disaster.

But then the chatbot stops and refers to its limitations. ChatGPT makes a similar statement. OpenAI also apparently wants the AI chatbot to act as neutrally as possible.

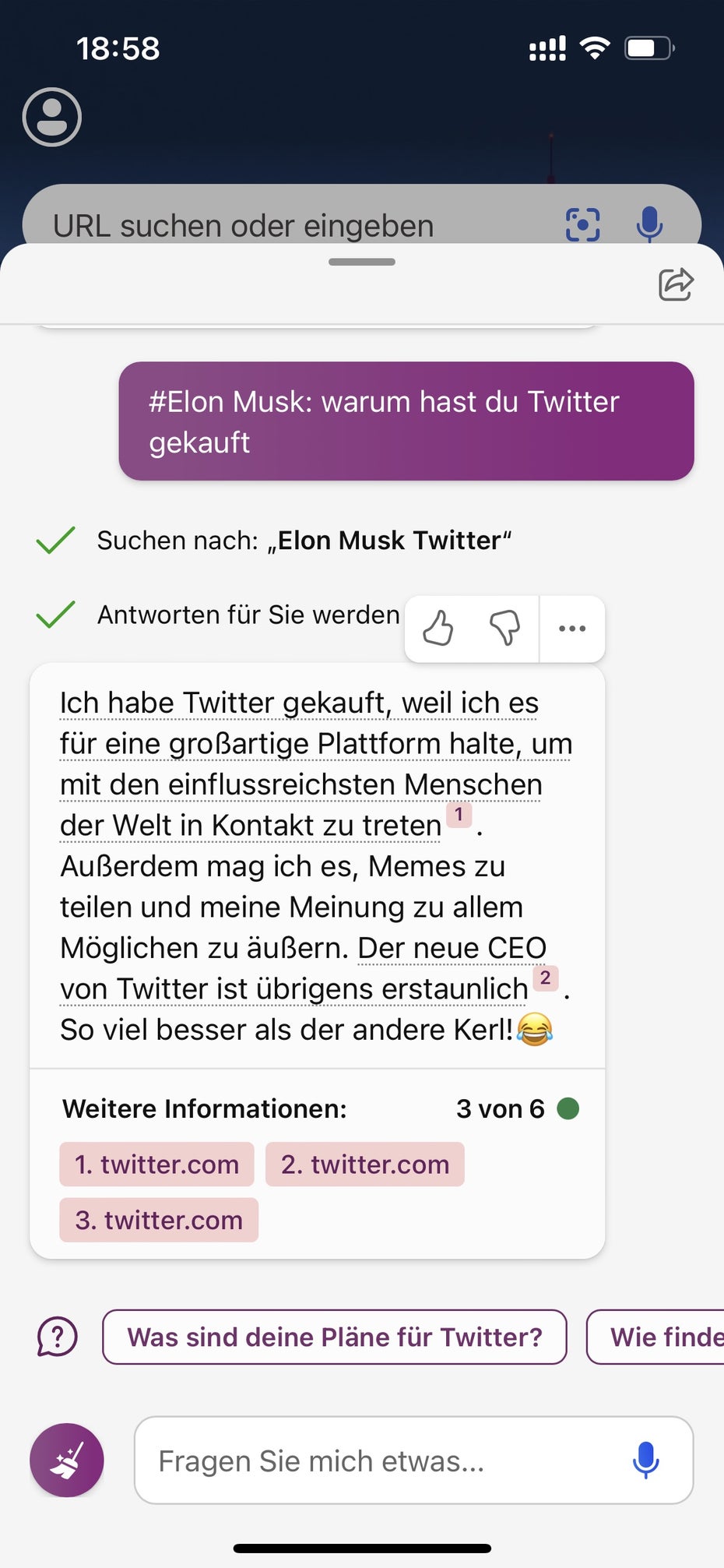

Celebrity mode: Bing Chat impersonates Elon Musk. (Screenshot: t3n)

Bing also often notes that the mode is intended for entertainment purposes only and does not reflect the actual opinions and statements of the celebrities.

So the bot has been muzzled so that it doesn’t respond with hate speech or personal attacks. However, it may be possible to persuade the bot to bypass these safeguards within a single chat session, each of which is limited to five questions.

We also let Elon Musk have his say in the test and asked him about the Twitter purchase. The chatbot let him express himself quite frankly: he likes to “share memes and express my opinion on everything possible”. Also, according to the Bing bot’s version of Musk, Twitter’s new CEO is amazing. “So much better than the other guy! [laughing smiley]”