How Facebook uses AI to ensure a safe advertising environment

Advertisement

Social networks offer great opportunities for advertisers, but hate posts and rampant misinformation can tarnish the user experience. Facebook is cleaning up and supporting its reviewers with an effective tool: artificial intelligence.

“Is the advertisement placed safely with you? We don’t want to appear next to questionable things.” This is what some advertisers ask when they are looking for a suitable medium for their offers. Brand safety is a central quality requirement in marketing: nobody wants to see their own brand associated with harmful content. And in the age of fragmented web content, the concern about a safe advertising environment is even greater: images, texts, videos and ads from a dozen sources can be pulled in and displayed side by side on a single website.

Viewed in this way, social networks such as Facebook and Instagram are the most diverse environment possible. Everyone with internet access, and that is a growing number of people worldwide, contributes content that can frame an advertisement appearing there. The network is the place where people get information and exchange ideas, meet friends, share passions and interact. It is these strengths that make Facebook and Instagram popular platforms for advertisers.

What can be done against hate postings and false information?

But wherever people gather, problems follow. Alongside useful and friendly posts, hatred, discrimination and misinformation appear. This harmful content not only casts a shadow over advertising, it also threatens the productive and helpful communication among all users that Facebook wants to promote. The podcast The Facebook briefing discusses the extent of hate speech on the internet and where platform operators, legislators and the judiciary still need to act, with guests including Bundestag member Renate Künast.

Harmful posts cloud the social media experience. (Photo: Shutterstock / GaudiLab)

With their Community Standards, Facebook and Instagram have created a framework to protect users and to keep harmful content off the platforms. In doing so, they follow the strategy “Remove, reduce, inform”. Clearly harmful content that violates the user agreement is removed. Problematic content that stays within the boundaries of the agreement is reduced in reach. Users are given contextual information so that they can decide for themselves whether they really want to click on, read or share something.
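The three-part strategy described above can be read as a simple decision rule. The following is only an illustrative sketch: the names `Action` and `choose_actions` and the two boolean inputs are hypothetical, and pairing "reduce" with "inform" for problematic content is an assumption made for the example, not a description of Facebook's actual systems.

```python
from enum import Enum


class Action(Enum):
    REMOVE = "remove"  # take the content down entirely
    REDUCE = "reduce"  # keep it online, but limit its distribution
    INFORM = "inform"  # attach context so users can decide for themselves


def choose_actions(violates_standards: bool, is_problematic: bool) -> list[Action]:
    """Map a (hypothetical) review result to the strategy's actions."""
    if violates_standards:
        # Clearly harmful content that breaks the agreement is removed.
        return [Action.REMOVE]
    if is_problematic:
        # Assumption: borderline content is reduced and shown with context.
        return [Action.REDUCE, Action.INFORM]
    # Unproblematic content is left untouched.
    return []


if __name__ == "__main__":
    print(choose_actions(violates_standards=False, is_problematic=True))
```

The point of the sketch is the ordering: removal takes precedence, and the softer measures apply only to content that remains within the rules.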

Artificial intelligence helps with review

The enormous number of posts that have to be checked every day cannot be managed by human labor alone. That is why Facebook uses tools based on artificial intelligence. Complex AI networks are trained to support the reviewers.

The AI often recognizes insults and inflammatory messages clearly and quickly. Irony is harder, as are seemingly harmless images that are discriminatory only in a particular cultural context. But artificial intelligence keeps getting better. “The more subtext and context play a role, the greater the technical challenges,” says Ram Ramanathan, Director of the AI Product Management Team at Facebook. “This is exactly why the big leap in the development of AI in recent years has been so significant: AI was able to learn what characterizes such content.”

AI helps check billions of postings. (Image: Shutterstock / metamorworks).

Over 96 percent of hate messages recognized

The current Community Standards Enforcement Report also demonstrates this learning success. Of the 25.2 million pieces of hate speech content that Facebook removed in the first quarter of 2021, 96.8 percent were detected proactively by AI. During the same period, the spread of hate speech was greatly reduced: on Facebook, only 6 out of every 10,000 content views were views of hate speech. This metric is especially important for advertisers who want to know how high the risk is of an ad being displayed alongside harmful content.
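The two figures quoted from the report measure different things: the proactive rate describes how removed content was found, while prevalence describes how often users actually saw such content. A small calculation makes the difference concrete. This is a minimal sketch using only the numbers cited above; the function names are illustrative assumptions, not part of any Facebook tool.

```python
def proactively_detected(removed_items: float, proactive_share: float) -> float:
    """Removed items that were found before any user reported them."""
    return removed_items * proactive_share


def prevalence(violating_views: int, total_views: int) -> float:
    """Share of all content views that showed violating content."""
    return violating_views / total_views


removed = 25_200_000                      # hate speech items removed, Q1 2021
proactive = proactively_detected(removed, 0.968)
found_after_report = removed - proactive  # remainder surfaced by user reports
hate_prevalence = prevalence(6, 10_000)   # 6 of every 10,000 content views

print(f"Proactively detected: {proactive:,.0f}")
print(f"Found after a report: {found_after_report:,.0f}")
print(f"Prevalence:           {hate_prevalence:.2%}")
```

On these figures, roughly 24.4 million of the removed items were found by AI before a report, and hate speech accounted for 0.06 percent of content views.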

Facebook has also made great strides in using artificial intelligence to detect illegal content and remove it more quickly, before users report it. The new systems can already recognize hate speech in 45 languages across all of its platforms. This not only makes review more efficient, but also means that reviewers do not have to expose themselves to the most harmful content.

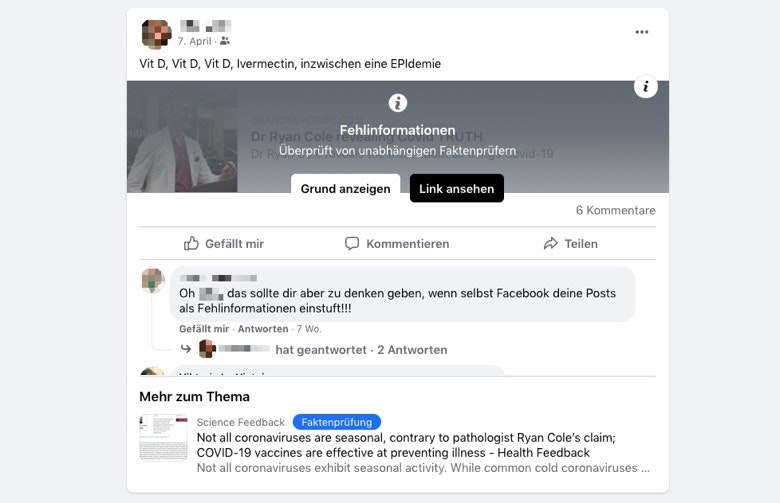

Incorrect information is flagged

When the AI models identify potential misinformation, it is surfaced to the independent fact checkers among the items to be checked and rated. As soon as the fact checkers classify a piece of content as false, Facebook not only reduces its distribution, but also labels it with a warning and gives people more context. For users, the checked content remains accessible, but it is displayed further down in the news feed and carries a warning. Thanks to powerful models, duplicates and similar versions of the post are also detected and labeled accordingly.

“This method has proven to be extremely effective,” says Ramanathan. “Our internal study has shown that content carrying a false-information warning was skipped and not viewed in 95 percent of cases.” Since the beginning of the pandemic, a particularly large amount of misinformation on medical topics has been shared. Facebook and Instagram have removed more than 16 million pieces of content worldwide because, according to health experts, they demonstrably contained false information about Covid-19 or vaccinations.

The video series “Let me explain”, which explains complex topics of the social network in simple terms, offers more insight into Facebook’s action against misinformation about Covid-19 vaccination.

Fact checkers mark misinformation on Facebook. (Screenshot: t3n).

Tough nut for AI: deepfakes and hate memes

The latest challenges for AI include deepfakes and hate memes. Deepfakes are artificially altered videos and images that cannot be identified as fakes with the naked eye. Hate memes use images and text that each seem harmless on their own. Combined, however, they produce a hurtful or discriminatory message.

Each of these media types requires extensive and specialized training for artificial intelligence. In Facebook AI's Hateful Memes Challenge and Deepfake Challenge, researchers compete against each other to recognize and block the contributions of other teams. The strategies developed there are also intended to help combat harmful content in the real world.

Communicating safely thanks to AI

Ramanathan does not believe that the work of the moderators and the AI restricts free speech. On the contrary: “We take the position that people on our platforms can openly express their opinions,” says the researcher. “However, this does not apply if you hurt others or cause them harm. Companies should also be able to participate without being associated with harmful or incorrect content.” The ultimate goal is therefore to provide a communication platform that is as safe as possible. “AI isn’t the only solution to problematic content,” says Ramanathan. “But it gives us the opportunity to react faster and more effectively than with human labor alone.”

How posts and fact checking work on Facebook is also the subject of an episode in the podcast The Facebook briefing, in which Max Biederbeck, Head of the German Fact-Checking Team at AFP, and Guido Bülow, Head of News Partnerships Central Europe at Facebook, discuss the basics of the cooperation. It’s worth listening to.

Thanks to AI support, Facebook and Instagram are platforms on which users and advertisers can communicate in a helpful and safe manner. Learn from Facebook AI Research how open source tools and neural networks will help create places in the future that people enjoy being in.

Your marketing, your business boost: the articles in the Facebook for Business topic special show how you can inspire your target groups and offer successful brand experiences.